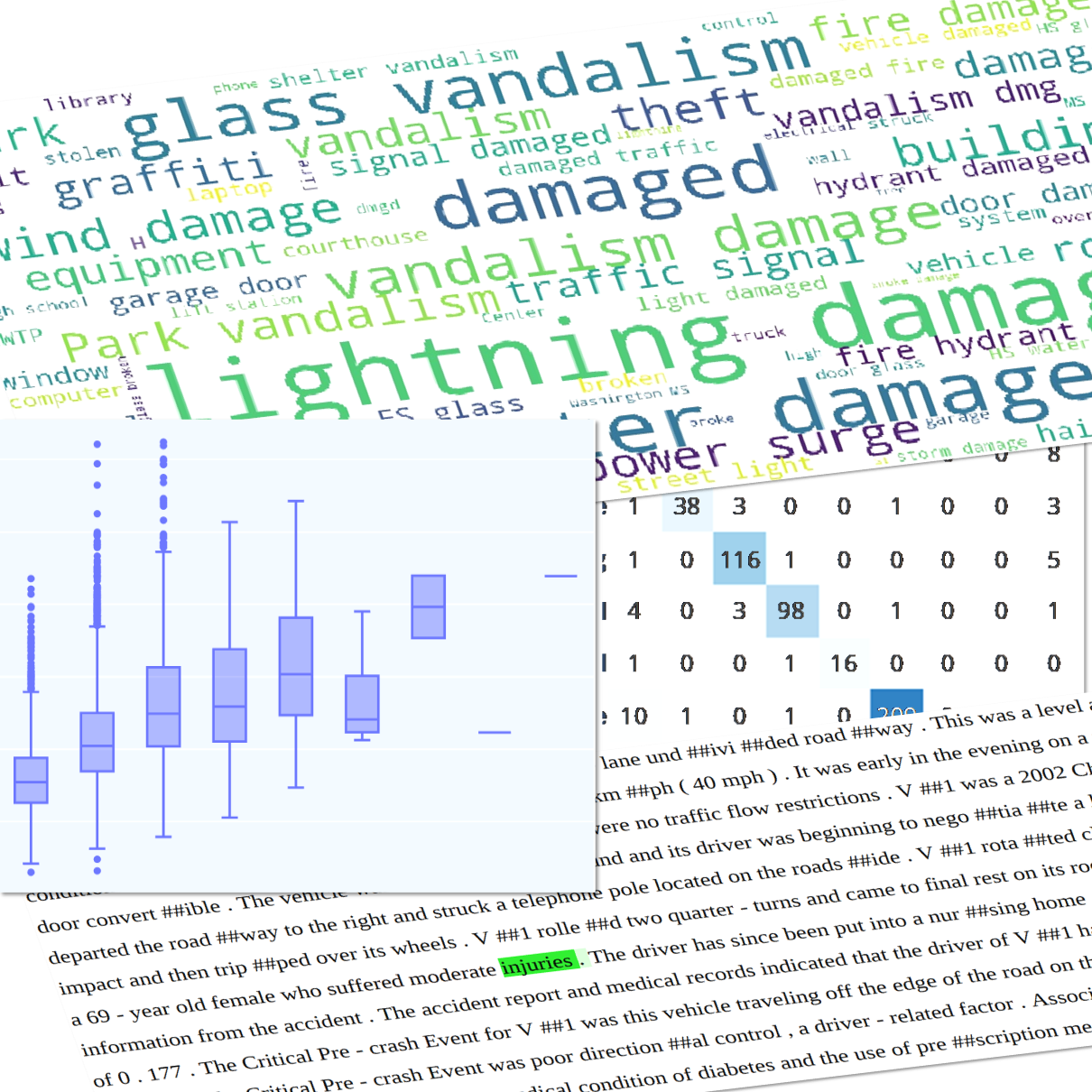

Have a look at the latest tutorial by Andreas Troxler and Jürg Schelldorfer! It discusses actuarial applications of natural language processing (NLP) using transformers.

NLP challenges

Practical applications of natural language processing (NLP) often come with a number of challenges. In an actuarial or insurance context, typical challenges are:

- The text corpus may use terminology that is not comonly used and understood by typical NLP models. That is, it may be domain-specific.

- Multiple languages might be present simultaneously.

- Text sequences might be short and ambiguous. Or they might be so long that it is hard to find out which parts are relevant to the task!

- The amount of training data may be relatively small. Even worse, it might be expensive to gather large amounts of input texts and annotate them with the target label.

- It is important to understand why an NLP model arrives at a particular prediction.

The approach to NLP

This tutorial illustrates techniques to address these challenges. First, it explains the key concepts underlying modern NLP techniques, with a focus on methods employing transformer-based models. These concepts are then applied to two datasets which are typical in actuarial applications:

- car accident descriptions with an average length of 400 words, available in English and German; and

- short property insurance claims descriptions in English.

Results

The results achieved by using the language-understanding skills of NLP models off-the-shelf, with only minimal pre-processing and fine-tuning, clearly demonstrate the power of transfer learning for practical applications.

In short, the case studies demonstrate that using state-of-the-art NLP techniques is simple, effective, and does not require large amounts of coding.

Delivery

The tutorial is shipped together with notebooks which lead through the Python implementation. All files, including the datasets and notebooks, are available on github.

Check it out!

Keywords

Natural language processing, NLP, transformer, multi-lingual models, domain-specific fine-tuning, integrated gradients, extractive question answering, zero-shot classification, topic modeling.